Breaking the AI bubble: Big Tech plus AI equals economy takeover

Whether we like it or not, and despite tales of its powers being greatly exaggerated, the AI genie is out of the bottle. What does that mean, and what can we do about it?

In another twist of abysmal AI politics, OpenAI CEO Sam Altman just admitted that we are in an AI bubble and that AGI is losing relevance. You may find this baffling or hilarious, or you may be wondering where that leaves the AI influencer types.

But despite the absurdity, AI and the narrative around it have become far too important to dismiss. Connecting the dots to make sense of it all calls for long-standing experience in AI, engineering, business and beyond. In other words, it calls for people like Georg Zoeller: a seasoned software and business engineer with experience in frontier technology in the gaming industry and at Facebook.

Zoeller has been using AI in his work since the 2010s, to the point where AI is now at the core of what he does. Zoeller is the VP of Technology at NOVI Health, a Singapore-based healthcare startup, as well as the co-founder of the Centre for AI Leadership and the AI Literacy & Transformation Institute.

In a wide-ranging conversation with Zoeller, we addressed everything from AI first principles to its fatal flaws and its place in capitalism. Today, we discuss regulatory capture, copyright, the limits of the attention economy, the new AI religion, the builder’s conundrum, how the AI-powered transformation of software engineering is a glimpse into the future of work, AI literacy and how to navigate the brave new world.

OpenAI’s regulatory capture

Picking up where we left off in part 1 of the conversation, Zoeller argues that OpenAI will never deliver the ROI promised to investors, because its competitors arrived at the same point while spending 100 times less, or even less than that. The underwhelming release of GPT-5 seems to support this thesis, prompting Altman to backpedal. But in a way, Zoeller adds, that does not matter:

“When you invest in the right people, you get regulatory capture in the US. You have the president of the United States host your fundraisers, you have the vice president of the United States go to the AI summit in Paris and dance the no safety dance.

The chickens will come home to roost because it is backed by the United States government. It owns the United States government. We know that a lot of money is flowing into these companies now”.

Zoeller points out that the US government's embrace of the AI bros is so tight that it even extends to feeding the social media histories of every single person who crosses the border into Grok to get an assessment.

It is an entirely non-transparent process, and the technology is not explainable. That would be a rights violation in any normal country, but we are no longer in normal territory. Either way, whether we like it or not, the technology is already transforming our economy and society.

Moving fast and breaking copyright

Unregulated use of AI has broken copyright, Zoeller argues. There are numerous reports of AI-generated copies of original content flooding marketplaces like Amazon. Creators put a lot of time and effort into their work, only to have it stolen before their eyes: same content, different words.

“The people who made those on the cheap, for three dollars of ChatGPT, never spent any money [creating]. They’re spending the money on advertisement. With that much volume, the only thing that matters is: will anyone actually see my product?

As someone who created a book or an app, you will always be at a disadvantage against the grifters who take what you did. They are pushing the app or the book out there, with social media amplification and paid ad spend”, Zoeller notes.

Zoeller does not have a rosy view of copyright. In his view, copyright was ostensibly created to give creators an incentive to keep creating, but it came about not because the world liked artists, but because the world liked publishers. Publishers lobbied hard, and they had a lot of money and power. Now copyright is broken, not just for books, but for the Internet at large.

Many of these models are trained on Web 2.0 resources such as Stack Overflow. Places like this, where every software engineer would go to find answers and share knowledge freely, were a Web 2.0 phenomenon that, Zoeller thinks, no longer works in the world of AI.

He also thinks that with the incentive system broken, we can no longer expect that building the right app is enough for people to come. Attention is the underlying primitive for everything, more valuable than money, because it represents opportunity. We have failed to regulate the attention economy, and have surrendered full control of all attention to a handful of platforms that will benefit massively from AI.

The limits of the attention economy

Facebook isn’t giving a Manhattan Project’s worth of AI away for free because it loves open source, Zoeller opines. It is doing so because accelerating content creation means more and more content on the supply side. That means creators need a platform, and they have to pay to get through the door to customers.

The public market of the digital economy is mediated by a handful of companies. The cost of user acquisition for small and medium-sized enterprises (SMEs) is often hundreds of dollars per user. This is a silent tax on every item sold, because advertising is baked into the cost of every product. AI is now commoditizing the digital products of the knowledge economy, just as the industrial revolution commoditized physical goods.

“We have a bit of a rosy view of industrialization because we all have cars and fancy things in our homes that previously were limited to rich people. But the digital economy is already commoditized. You can play the most successful games in the world for free. For fifteen dollars a month, you can get as many videos and TV shows and music as you want.

So the reality is we’re not even going to see a supply-side expansion, because we are heavily limited by attention. People just don’t have more time to spend on their phones, time to play more games or watch more movies”, Zoeller said.

The AI religion

Governments, Zoeller points out, are careful not to regulate the technology out of fear of missing out on the magical growth it might deliver. But we have reached a dead end, because the only outlet left for growth is automating, i.e. removing, jobs. That redistributes GDP rather than growing it.

Big Tech companies, Zoeller relates, always talk about the best things that may happen. That forces regulators to weigh present negative effects against untold future riches: “No one has the guts to walk away from growth in a very growth-constrained world, because we are just at that point where capitalism is kinda running out of growth”.

The techno-optimist scenarios claim that fueling AI will enable the world to take on its biggest issues, such as climate change, and create abundance for everyone. Zoeller calls this out on both technical and social grounds.

On the technical front, Zoeller points out that LLMs are hitting a wall. All the content in the world, every book that’s ever been written, is probably already in the training corpora of frontier LLMs. But there’s no scenario where that turns into superhuman intelligence.

“You want the answer to climate change? It’s already in there. We know the answer. We just don’t like it. We don’t like that we have to cut down carbon emissions.

Technology has become a bit of a religion. It’s like Latin, the high priest of growth. No one wants to fight that, but it’s nonsense. Where does that leave us? That’s fundamentally mediated by your view of the world”, Zoeller says.

The builder’s conundrum

Drawing on both his work with AI and his years of experience in the gaming industry, Zoeller uses gaming as an example of the conundrum people face: hype and under-appreciation exist at the same time.

Zoeller thinks that the gaming industry is toast. Not because the technology can replace all creativity, but because it takes a large number of jobs in that industry and wipes them out.

3D modeling is no longer going to be a thing for most people, because the technology will be able to do it. In a short time, it has gone from being unable to draw hands in images to producing videos that are close to indistinguishable from reality.

It’s only a matter of time until good storytellers get their hands on it and integrate it into their process. Zoeller thinks an AI native game studio is possible today. But the technology is moving so fast that in six months or a year it would be completely different.

“We’re in a weird situation where it is too early, month over month, to dive into the technology and really build with it. But you should dive into the technology to get familiar with it and to get a sense of when it will plateau, and when it will be the right time to build.

The game industry is toast because you cannot invest in anything. We know that in six months or a year, there will be that magical point at which it starts really making sense. Before that, you’re mostly doing R&D that everyone else gets for free from open source.

I would wager there are other industries in that same bucket. At the same time, when the technology interfaces with the real world, the hype is completely off base, especially around agents – it’s just not working”, Zoeller said.

AI is transforming software engineering

Zoeller also shared his experience of working in a critical domain: healthcare. The unreliability of LLM-based AI renders it unfit for such applications. And yet, people have to work with it. As Zoeller puts it, that is scary at first, and it can leave you feeling almost demotivated.

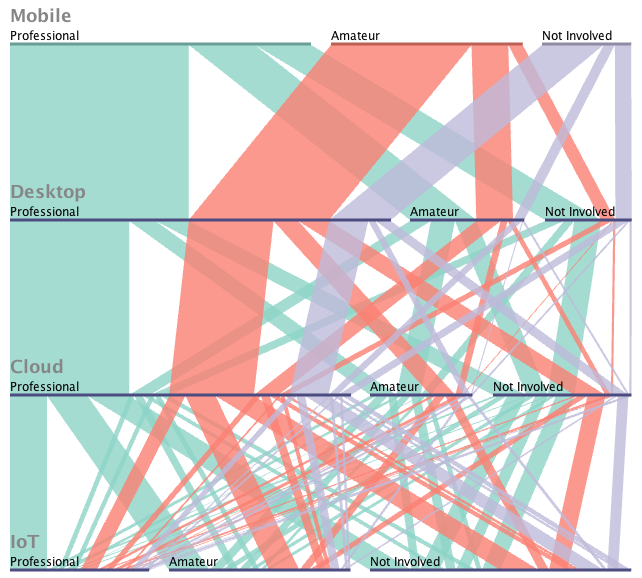

For example, software engineering becomes something different. Human coders are relegated to code reviewers, and reviewing code written by a machine that oscillates between making stupid errors and writing brilliant code is tedious.

Zoeller acknowledges that for some scenarios AI coding is so fast that coding by hand no longer makes sense. The problem, however, is that AI coding brings a radically different aspect to software engineering, and not necessarily a good one: it introduces nondeterminism into the abstraction layers of software engineering.

Software engineering has evolved from working in low-level assembly languages to progressively higher levels of abstraction. There are many layers of abstraction now, which means a lot of complexity as well. But these layers are all deterministic, and therefore they can be tested.

A given set of inputs reliably produces the same set of outputs. That is a precondition for testing, which is a hugely important part of the software engineering discipline.
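To make the determinism point concrete, here is a minimal Python sketch. It is not Zoeller's code, and `dosage_mg` and `generative_dosage_mg` are hypothetical names chosen for illustration. The first function is deterministic, so a fixed assertion holds on every run. The second is a stand-in for a generative component, simulated with a random draw that returns a wrong answer with an assumed 1% probability; it will pass many identical checks and still fail on some later call.

```python
import random


def dosage_mg(weight_kg: float) -> float:
    """Hypothetical deterministic rule: 2 mg per kg, rounded to 0.1 mg."""
    return round(weight_kg * 2.0, 1)


def generative_dosage_mg(weight_kg: float, rng: random.Random,
                         error_rate: float = 0.01) -> float:
    """Hypothetical stand-in for a generative model: returns the right answer
    most of the time, but a wrong one with probability error_rate."""
    correct = dosage_mg(weight_kg)
    if rng.random() < error_rate:
        return correct * 10  # plausible-looking but wrong output
    return correct


# Deterministic code: the same input always yields the same output,
# so a single assertion holds on every run.
assert dosage_mg(70.0) == 140.0
assert all(dosage_mg(70.0) == 140.0 for _ in range(1_000))

# Nondeterministic stand-in: identical calls, occasionally different answers.
rng = random.Random(42)  # seeded only so this demo is reproducible
calls = 10_000
wrong = sum(generative_dosage_mg(70.0, rng) != 140.0 for _ in range(calls))
print(f"{wrong} wrong answers out of {calls} identical calls")
```

The seed exists only to make the demonstration repeatable; a real model gives you no such control over which call goes wrong.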

“You can drill down all the way to the microprocessor to find whatever the bug is in the software and make it work. But the moment you add GenAI to it, it’s game over – because GenAI is nondeterministic. You can have one input, and it can have infinite outputs. And you can test a hundred times, and the hundred and first time, it’s wrong.

That might not sound terrible. But when you have ten thousand patients, a 99% success rate is not good enough. This is massively underestimated. It’s something you need to teach, because testing doesn’t work anymore. You have to switch to observability in order to keep your software running safely”, Zoeller points out.
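The numbers in that quote can be checked with a few lines of arithmetic. The sketch below assumes, for illustration only, that each model call fails independently at a fixed 1% rate, which real failure modes rarely do so neatly. It computes the expected number of affected patients out of 10,000 and the chance that 100 consecutive tests all pass anyway. It then shows one hypothetical form of the observability Zoeller describes: validating every production output at runtime and tracking the observed failure rate. `OutputMonitor`, `alert_threshold` and the 0 to 500 mg range are invented for this example and are purely illustrative.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: independent failures at a fixed 1% rate.
failure_rate = 0.01
patients = 10_000

expected_failures = patients * failure_rate       # 100 patients
p_all_100_tests_pass = (1 - failure_rate) ** 100  # about 0.366

print(f"Expected failures: {expected_failures:.0f} of {patients}")
print(f"Chance that 100 tests all pass: {p_all_100_tests_pass:.1%}")


@dataclass
class OutputMonitor:
    """Hypothetical runtime monitor: checks every output and reports
    when the observed failure rate exceeds a threshold."""
    validator: Callable[[float], bool]
    alert_threshold: float = 0.005
    total: int = 0
    failed: int = 0

    def observe(self, output: float) -> bool:
        """Record one output and return whether it passed validation."""
        self.total += 1
        ok = self.validator(output)
        if not ok:
            self.failed += 1
        return ok

    @property
    def failure_rate(self) -> float:
        return self.failed / self.total if self.total else 0.0

    def should_alert(self) -> bool:
        return self.failure_rate > self.alert_threshold


# Hypothetical validator: accept only outputs in an assumed plausible range.
monitor = OutputMonitor(validator=lambda mg: 0 < mg <= 500)
for output in [140.0, 1400.0, 140.0, 140.0]:
    if not monitor.observe(output):
        # The caller decides what to do with flagged outputs, e.g. withhold them.
        print(f"Flagged out-of-range output: {output}")
print(monitor.failure_rate, monitor.should_alert())
```

Under these assumptions, 100 clean test runs still happen roughly a third of the time despite a 1% failure rate, which is why a passing suite proves little here. The monitor does not make the model deterministic; it makes failures visible in production so they can be counted, withheld and escalated.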

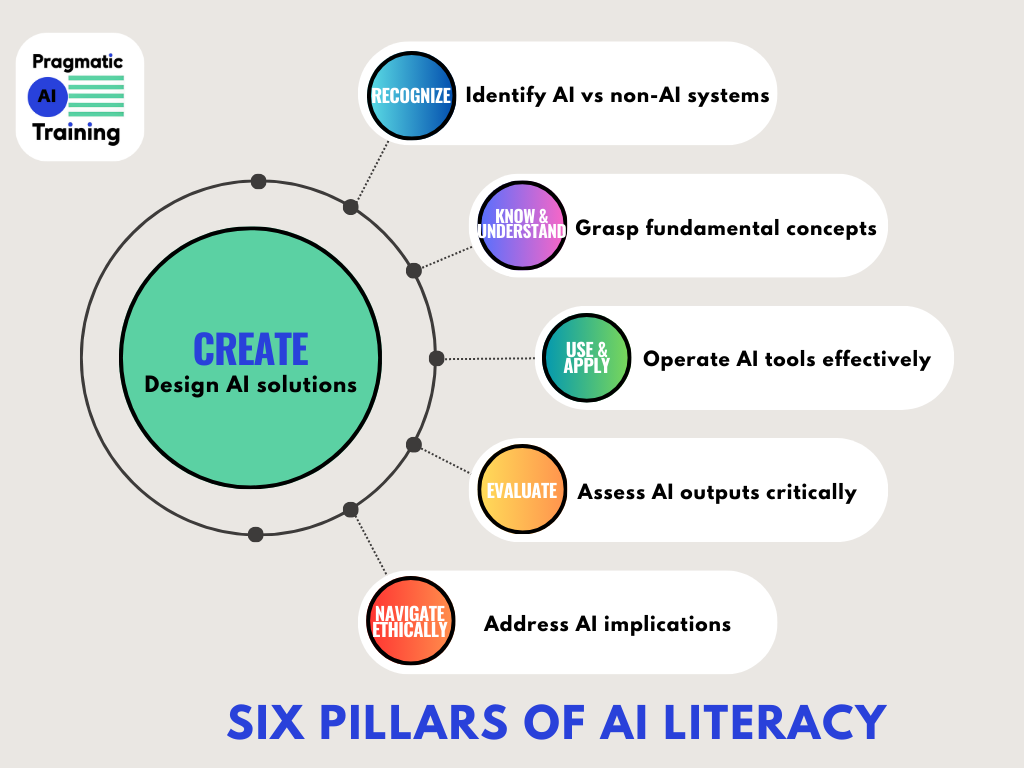

AI Literacy

Either way, even for people who feel that it’s worthwhile pushing back, Zoeller’s message is clear: you still need to learn the technology. You can’t be credible unless you have hands-on exposure.

So then the question is, what’s the best way to do that? In other words, what is the best path towards AI Literacy? AI Literacy is an EU AI Act requirement as of 2025. This means that every organization that uses AI in one way or another should ensure AI Literacy for its people.

Zoeller is involved in AI Literacy programs as well, and he believes most educational institutions are not fit for purpose. The frontier nature of the technology is incompatible with their internal bureaucracy. Plus, Zoeller notes, the academic peer review system is deeply problematic at this point – sidestepped by arXiv and hijacked by PR and AI slop.

To be up to date and useful, AI education requires educators who use and experiment with the technology and also keep up with the latest research. This, of course, is a full-time job, and most of us can’t do that.

A typical example is so-called prompt engineering. Most of the educational material available is focused exclusively on ChatGPT, which is already a problem. But it gets worse, because most of it is also outdated and wrong by now. The models have changed, so the early techniques are no longer useful.

What you have to teach people, Zoeller emphasizes, is the fundamentals that help connect how this technology works with their own experience. These include the first principles of AI, how transformers work, which types of use cases lend themselves to AI, and what the pitfalls are.

Zoeller is focused on AI Literacy for executives and line managers. The reason, he says, is that people have to go through a trough of disillusionment as they realize what AI can mean for their organization. He finds it doesn’t help to expose people with no agency to that knowledge. What does help is thoughtful change management and compliance policies.

Big Tech plus AI equals economy takeover

Whether you agree with Zoeller’s takes or not, there’s one thing most of us will agree on: it’s all moving at superhuman speed, and nobody is really able to keep up anymore. Zoeller refers to this as “a singularity”, although not the kind people usually think of in the context of AGI:

“Singularity is that point when progress goes so straight up that you can no longer see what is happening the next day or what lies beyond the curve. We’re already here. Not because the technology is so fast yet – there’s still room to go, unfortunately – but because the ability of our institutions, our governance organizations, has been vastly outstripped.

I do believe that is intentional, because I worked in Big Tech. What was sometimes a drawback for us, our six-month performance cycles for example, is now a plus. These companies have realized that, literally, they are incapable of running projects that are longer than six months because of the incentive system. And that is good, because you cannot run projects longer than six months right now.

If you’re planning a project that takes longer than six months in the digital space right now, you are saying – I can predict where AI is going to be in a year, and that it’s not gonna make all my investments obsolete”.

Zoeller thinks nobody is capable of such a prediction, because Big Tech companies are intentionally accelerating technology to keep the rest of the economy vulnerable to them. The rest of the economy, as he puts it, is either embarking on the wrong adventure or paralyzed.

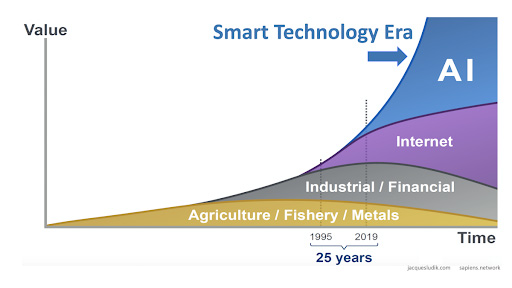

AI is not your average disruptive innovation

A typical argument used against AI skepticism is that this is the usual cycle of technological disruption: in due time, the dust will settle, we’ll figure it all out, and new jobs will be created that we can’t even imagine at this time.

Zoeller, who also happens to be teaching a university class on this, disagrees. What’s different this time is speed, he says. Unlike infrastructure for previous technologies such as electricity or automobiles, which took a long time to deploy physically, the infrastructure for AI is digital and it’s already there – APIs, apps, open source, GPUs, the cloud.

People, he argues, are not very good at adopting new technologies – they have to be pushed. It’s often not pretty, but time makes all the difference in mitigating the impact on society. Time is something we don’t have this time around.

But that’s not the only thing that’s different about AI, he adds. To illustrate this point, Zoeller turns again to software. He refers to software as “a machine that automates a specific task”. AI represents the ability to print the machine, which is no longer created by a human.

“When you look at the properties of the transformer, what it basically comes down to is – if there is enough data available about the output of a profession, and there are patterns in the profession, and enough volume, it can be automated. The machine can be printed.

So when people say we’ve always figured out new jobs, that is alright. But we’ve never faced a technology that can automate any job that does have patterns. So I find these appeals to history not very helpful”, Zoeller said.

The brave new AI world

Software engineering is among the first professions to be disrupted, because there’s abundant data that can be used for training, and the output is testable. Ironically, software engineers are now on the receiving end of automation they helped bring about. Extrapolating this, Zoeller thinks we’re likely to see a future in which people first help train their replacements, then are relegated to quality assurance roles.

Where does that leave us? Zoeller is not optimistic. In fact, he clearly spells it out – we’re running another industrial revolution, but this one has new properties that make it extremely dangerous for society. Not because rogue AI may take over, but because it may lead to a full system collapse – or rather, we may add, accelerate the path we’re already on.

“Taking all of this together, no. I don’t think we can say we’re gonna be okay. We’re gonna have to work for being okay, and we’re gonna need leadership, to be okay”, Zoeller concluded.